More Sophisticated Data for Kids & College Readiness

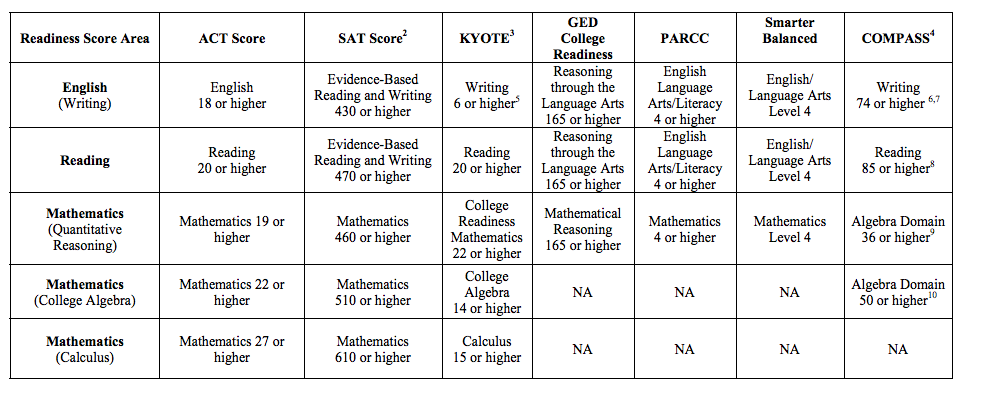

Looking at some of the recent data on college readiness in Kentucky, I was struck by the lack of sophistication in the data. A single standardized exam is used to determine who is and is not ready for college success. While this approach may have been a step forward and helped schools focus on raising ACT scores, I'm still concerned. It also seems to run against the growing tide of elite colleges moving away from the ACT, even as P-12 may be increasingly swindled by it. Here is the chart that we use in Kentucky, including the ACT cut scores (the ACT is the most prevalent measure, although our in-state test, KYOTE, gets used a decent amount too).

Full chart with footnotes available at: http://cpe.ky.gov/policies/academicinit/deved/

What strikes me is that this is so, so little information about a given child. It says almost nothing meaningful about either a child's current abilities or their future potential. It does say something, but we should all realize that the "something" it says is far removed from the reality you see once you get to know the kids.

Of course, the fact that standardized tests tell us very little is nothing new. We have known that for a while, so what really concerns me is that we keep doing the same things. If the definition of "college ready" had little impact on the child, that would be annoying but fine ... but it actually has a massive impact, beginning in high school, where students without a 20 on the ACT Reading test, for instance, cannot take the low-cost dual credit courses that give them a jump start on college. So, for that child, the cutoff costs both time and money. When the child graduates high school and tries to enter college, this somewhat arbitrary number sets the student back again: they have to take remedial classes (which they pay for) that earn no credit toward graduation. Setting children back like this at the end of high school is a really good way to set them back in life generally, and I think that is pretty much what is happening. If there is a big barrier to entry ... then fewer students will enter.

I'm not arguing that remedial courses are unnecessary or that we do not need structures to determine college readiness, but if we are going to make these massive decisions about children, then the metrics informing those decisions should be as strong as possible. I think we can make better-informed decisions in at least 3 ways in the near term, and 1 in the long term that we should start planning for now.

- Tap into more of the data currently available on a student. Datasets within Infinite Campus here in Kentucky already contain a lot of additional information about a kid: how often they come to school, how many disciplinary events they have had and of what types, and, of course, grades, with varying degrees of detail and consistency. Beyond Infinite Campus, other datasets, like the student's ILP (Individual Learning Plan), contain additional information, some of which is supplied by the kid themselves. There are also other assessments like MAP and EOC (both of which have their own flaws, but, like polls, can work better together). All of this makes for a messy mass of data, but it seems possible to make sense of it and get a richer understanding of a child's readiness (or merits for graduation, whatever).

- On the grading front, another near-term opportunity is helping high schools standardize not their curriculum but their systems of assessment. Right now, few people (outside of parents, perhaps) put stock in any individual letter grade because it doesn't really describe what a kid can do. A kid might have an exceptionally scientific mind, but miss a couple of assignments and get a C in a biology class. The "C" tells us little and is actually misleading about that kid, which is why I think most college metrics put little stock in high school grades. But right now, a movement led by Tom Guskey at UK (this book is a good entry point) is afoot to make grading a more informative practice. In the near term, it would make a lot of sense for us to begin pilots with schools engaging in standards-based grading to determine how predictive that vastly richer grading dataset is, and which of its metrics predict most accurately.

- Again, near term, why don't we just ask teachers which kids have which skills that we deem relevant to graduation/college? Let's build a smart rubric that asks teachers about kids' skills and asks them to answer only for skills they have personally seen demonstrated and for which they could provide evidence. Let's then run mass surveys to collect the data at some point (end of junior year, whatever). Then, to keep it honest, let's follow up with a few random spot checks in which we ask teachers to show the evidence behind their rubric scores. Each school could assign a teacher (preferably the Advisory teacher) to complete the rubric for their small group of students. Not only would this encourage the spread of Advisory models and portfolio systems across P-12, but it would provide a rich external assessment of each child from a skilled educational professional. I'm not sure why we don't do this already, actually.

- Now, long term, we need to begin preparing for the inevitable transition of learning to digital platforms, which provide big, big data (like, a LOT more). Soon (hopefully within 10 years), all high schools will be digital in their learning management (some already are). In fact, some states have already taken big steps in this direction. At STEAM here in KY, we use the Canvas LMS for every single course. It worked so well at STEAM that Fayette is now going district-wide, and similar LMS solutions are emerging throughout the state. We even have our own Ed. Tech. LMS startup here in Lexington. For a child, nearly every learning activity throughout the whole of high school is digital and in a single platform. This is not perfect data, but it is exponentially more data about a child than we have now: how quickly students complete things, how many tries it takes to get it right, how collaborative they are, how much time they spend on their readings, and, of course, their performance on various assessments (hopefully graded with a standards-based system). This is already MASSIVE data that mostly just washes over us, and it is only going to expand exponentially over the next decade. Honestly, it is hard to fathom how much data we could have. We need systems that talk to each other, we need algorithms that make sense of the data, we need informative data displays, we need to know which variables have what predictive value ... whew.

If all of this seems like a lot of work ... well, it is. Absolutely it is a lot of work and, obviously, I get the attraction to a single test and a single metric. It is simple and clean. But, kids are not simple and clean. They are complex with messy lives spinning off messy data. They have good days and bad. They have projects they ace and projects they struggle with. Teachers that click and teachers that don't. They are kids and they are all beautiful and intelligent in entirely different ways. A "21" is not in any way an accurate representation of their beauty, intelligence, or potential. We can do better and we can start now.

As a baseball guy, I'd say we are clearly already in the Moneyball era of public education in terms of data, but it is like we are ignoring most of it and still making decisions about kids based on only 1 metric in 1 game. We have more data; we are just not using it. And we are going to have a LOT more data very, very soon.
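The alternative to "1 metric in 1 game" can be sketched as a composite score: put each measure on a common scale and average them, so no single test decides everything (the same reason polls work better together). The Python sketch below assumes hypothetical measure names, z-score standardization within the student's cohort, and equal weights by default. It illustrates the idea and is not a validated readiness formula.

```python
from statistics import mean, pstdev


def zscores(values):
    """Standardize scores against the cohort (mean 0, population SD 1)."""
    mu, sd = mean(values), pstdev(values)
    if sd == 0:
        # Everyone scored the same, so this measure cannot separate students.
        return [0.0 for _ in values]
    return [(v - mu) / sd for v in values]


def composite(cohort, measures, weights=None):
    """Weighted average of each student's standardized scores across measures.

    cohort: dict mapping a (hypothetical) measure name to a list of scores,
        with students in the same order in every list.
    measures: which measures to combine.
    weights: optional dict of measure -> weight; equal weights by default,
        which is an assumption rather than anything validated.
    """
    weights = weights or {m: 1.0 for m in measures}
    total = sum(weights[m] for m in measures)
    standardized = {m: zscores(cohort[m]) for m in measures}
    n = len(cohort[measures[0]])
    return [
        sum(weights[m] * standardized[m][i] for m in measures) / total
        for i in range(n)
    ]
```

For example, in a two-student cohort where one student is a standard deviation below the other on the ACT but a standard deviation above on MAP, `composite({"act": [18, 22], "map": [220, 200]}, ["act", "map"])` puts both students at 0.0 instead of letting the ACT alone sort them.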

This needs to be a pretty high priority.